A guest post by Martin Polak.

Does this sound familiar to you …?

The release date is approaching fast and the number of implemented changes keeps growing, but testing is lagging behind because the test environments are not ready when needed. All this simply because deploying the software onto the test environments is so cumbersome. There are many reasons for this: developers are unavailable due to release stress, operations staff are far too busy, and so on. As a result, too many errors occur during deployment, and nobody really knows the root cause.

As a result, once again there is too little time left for proper testing. Maybe we get away with it this time, but what about the next releases? The problems keep growing instead of vanishing. It’s like walking along the edge of a huge cliff.

And finally, the big day (often: the big night) has come and the new version is about to be released. Sweaty fingers race over keyboards late at night, parameters are sent via messenger, information is pulled from long Excel lists, and sleepiness is fought with coffee that has long gone cold. Early in the morning it is finally done: the new version is published, hopefully without any errors.

Let’s Automate!

Wouldn’t it be nice to have this automated? Automic Automation has been around for a long time, and its agents are deployed everywhere, so why isn’t this already automated and scheduled?

Here’s the answer: all the necessary parameters, such as paths, passwords and environment variables, have to be managed in a maintainable and scalable way. This task quickly becomes overwhelming. Which server belongs to which environment? Where are the versions of the configuration files, and which database schema is the right one? There are far too many applications, versions and servers, and even more paths, passwords and URLs. All these parameters would have to be maintained in hundreds of nested VARAs. Who is supposed to keep track of all that? Phew!
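To make the problem concrete, here is a minimal Python sketch of the kind of layered lookup that otherwise ends up scattered across nested VARAs: each application has default values, and each environment overrides only what differs. This is not CDA’s internal data model; the application, keys, host names and values are hypothetical.

```python
from collections import ChainMap

# Hypothetical defaults for one application (illustrative values only).
application_defaults = {
    "install_path": "/opt/webshop",
    "db_schema": "WEBSHOP",
    "log_level": "INFO",
}

# Hypothetical per-environment overrides: only the values that differ.
environment_overrides = {
    "test": {"db_host": "test-db.example.com", "log_level": "DEBUG"},
    "production": {"db_host": "prod-db.example.com"},
}


def resolve_parameters(environment: str) -> dict:
    """Merge parameters so that environment-specific values win over defaults."""
    overrides = environment_overrides.get(environment, {})
    # ChainMap searches the first mapping first, then falls back to the defaults.
    return dict(ChainMap(overrides, application_defaults))


if __name__ == "__main__":
    for env in ("test", "production"):
        print(env, resolve_parameters(env))
```

With this structure, adding an environment means adding one small override dictionary rather than copying every value, which is the kind of structure a tool like CDA provides at scale.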

On top of that, these tasks fall under the responsibilities and processes of very different departments: development, QA/testing and operations. The “DevOps” approach advocates that development and operations tackle these issues with common processes, incentives and tools.

CDA provides the solution: a clean web interface with the structures needed to manage all these parameters, plus tools to model different processes such as approvals.

Continuous … what?

But how do you get there? How do you build a robust “Continuous Integration” pipeline with CDA, and how do you extend it towards “Continuous Delivery”? And what do these terms mean anyway?

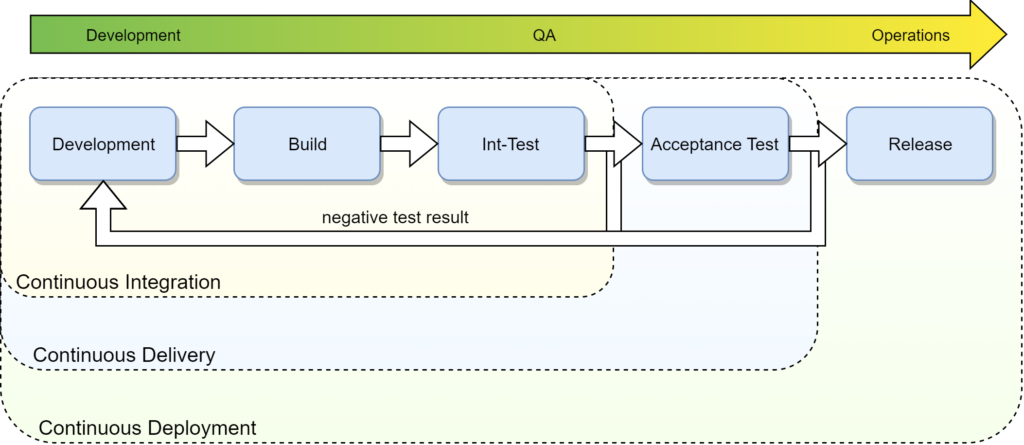

“Continuous Integration” means that the code of all development teams is combined (“integrated”) as often as possible (at least daily!) to ensure that it works well together, or to find out as early as possible if it does not.

“Continuous Delivery” goes one step further: at any point in time, the developed and compiled package is ready to be released (even if it is not actually released). If every such version is released immediately (pushed to production), this is called “Continuous Deployment”.

The ingredients

And how can you achieve Continuous Integration or Continuous Delivery? The following ingredients are required, and you probably already have some of them:

- A version-control system (e.g. GitLab, GitHub, etc.)

- A build server (e.g. Jenkins)

- A software repository that suits your needs (e.g. Sonatype Nexus, FTP, etc.)

- A test automation tool (e.g. Tricentis Tosca)

- Broadcom’s Continuous Delivery Automation (CDA)

The recipe

How does all of that fit together?

For example, like this:

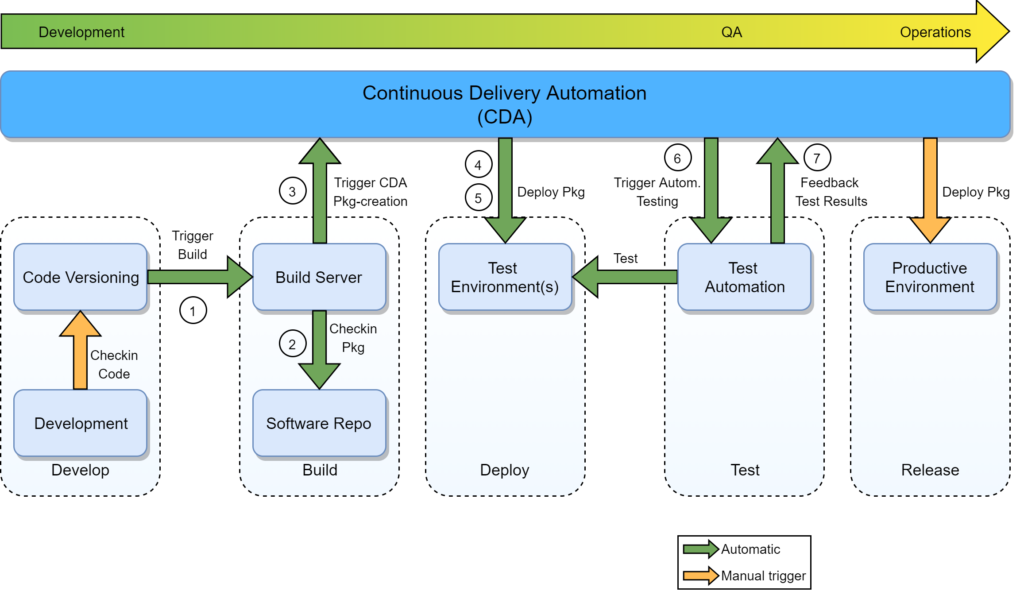

- As soon as a developer checks in code to a development branch in the version-control system, the build server is automatically triggered (for example, via its REST API).

- The triggered build server compiles the code (into a “package”) and uploads the resulting artifact to the software repository.

- The build server then starts a workflow in CDA via CDA’s REST API, passing the version number and other values as parameters. This workflow creates a “package” (a version) for the corresponding application in CDA and sets all required parameters.

- Finally, this workflow starts the deployment workflow that deploys this package to the test environment.

- The deployment workflow first downloads the required artifacts from the software repository and then performs all necessary steps on the servers of the respective (test) environment.

- In the final steps of this deployment workflow, the test automation tool is invoked, which in turn feeds the test results back to CDA.

- Upon completion, the developer (and/or other stakeholders) receives an e-mail with all relevant information (test results, etc.).
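The chain above can be sketched as a small Python simulation. This is not how CDA or any build server is actually invoked: every function (`build`, `upload_artifact`, `create_package`, `deploy`, `run_tests`, `notify`) is a hypothetical in-memory stand-in for a REST call or workflow step, and the commit hash and version number are made up. What it shows is the order of the steps and the fact that a single check-in drives everything else.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical in-memory stand-in for the software repository (e.g. Nexus).
artifact_repository: Dict[str, bytes] = {}


@dataclass
class PipelineRun:
    """Record of one pass through the chain, triggered by a single check-in."""
    commit: str
    version: str
    steps: List[str] = field(default_factory=list)
    tests_passed: Optional[bool] = None


def build(run: PipelineRun) -> bytes:
    # Stand-in for the build server compiling the code into an artifact.
    run.steps.append("build")
    return f"binary for {run.commit}".encode()


def upload_artifact(run: PipelineRun, artifact: bytes) -> None:
    # Stand-in for uploading the artifact to the software repository.
    artifact_repository[run.version] = artifact
    run.steps.append("upload")


def create_package(run: PipelineRun) -> None:
    # Stand-in for the CDA workflow that creates the package and sets parameters.
    run.steps.append("create_package")


def deploy(run: PipelineRun, environment: str) -> None:
    # The deployment needs the artifact from the repository; fail if it is missing.
    if run.version not in artifact_repository:
        raise RuntimeError(f"artifact {run.version} not found in repository")
    run.steps.append(f"deploy:{environment}")


def run_tests(run: PipelineRun, test_suite: Callable[[str], bool]) -> None:
    # Stand-in for invoking the test automation tool, which reports back.
    run.tests_passed = test_suite(run.version)
    run.steps.append("test")


def notify(run: PipelineRun) -> str:
    # Stand-in for the e-mail sent to the developer and other stakeholders.
    run.steps.append("notify")
    status = "passed" if run.tests_passed else "failed"
    return f"Version {run.version} ({run.commit}): tests {status}"


def on_commit(commit: str, version: str,
              test_suite: Callable[[str], bool]) -> Tuple[PipelineRun, str]:
    """Everything that happens automatically after a developer checks in code."""
    run = PipelineRun(commit, version)
    artifact = build(run)
    upload_artifact(run, artifact)
    create_package(run)
    deploy(run, "test")
    run_tests(run, test_suite)
    return run, notify(run)


if __name__ == "__main__":
    run, message = on_commit("a1b2c3d", "1.4.0", test_suite=lambda version: True)
    print(run.steps)    # ['build', 'upload', 'create_package', 'deploy:test', 'test', 'notify']
    print(message)      # Version 1.4.0 (a1b2c3d): tests passed
```

In a real setup, each stand-in would be replaced by the corresponding system’s own interface; the sketch only fixes the sequence.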

What have we achieved now?

The developed code was compiled, uploaded into a repository and deployed by CDA onto a test environment where automated testing was performed. Finally, all stakeholders received the relevant information.

From now on, at the push of a button in CDA, the same package can either be deployed to the next higher environment (e.g. acceptance test or production) or, if test cases fail, rejected. The only manual step in this Continuous Delivery chain is the code check-in to the version-control system!
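The “promote or reject” decision can be sketched as a tiny state machine in Python. The environment names follow the examples in the text; the `Package` class and `on_button_press` handler are hypothetical and do not represent CDA’s actual object model. The design point they illustrate is that the *same* package moves up the chain instead of being rebuilt per environment, and a rejected package can no longer be promoted.

```python
from enum import Enum

# Hypothetical environment chain, lowest to highest.
ENVIRONMENTS = ["test", "acceptance", "production"]


class PackageState(Enum):
    ACTIVE = "active"
    REJECTED = "rejected"


class Package:
    def __init__(self, version: str) -> None:
        self.version = version
        self.environment = ENVIRONMENTS[0]  # deployed to test by the CI workflow
        self.state = PackageState.ACTIVE

    def promote(self) -> str:
        """Deploy the same package to the next higher environment."""
        if self.state is PackageState.REJECTED:
            raise ValueError(f"{self.version} was rejected and cannot be promoted")
        index = ENVIRONMENTS.index(self.environment)
        if index == len(ENVIRONMENTS) - 1:
            raise ValueError(f"{self.version} is already in {self.environment}")
        self.environment = ENVIRONMENTS[index + 1]
        return self.environment

    def reject(self) -> None:
        self.state = PackageState.REJECTED


def on_button_press(package: Package, tests_passed: bool) -> str:
    """Hypothetical handler for the manual 'promote or reject' decision."""
    if tests_passed:
        return f"{package.version} deployed to {package.promote()}"
    package.reject()
    return f"{package.version} rejected in {package.environment}"
```

For example, `on_button_press(Package("1.4.0"), True)` returns `"1.4.0 deployed to acceptance"`, while a package with failed tests is marked as rejected and stays where it is.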

Because this process runs so often (and painlessly!), it becomes bulletproof. If the configurations of the pre-production environments match those of the production environment as closely as possible, hardly anything can go wrong even in a production deployment. The advantages are obvious:

- Frequent and early testing of the software (“shift left”), but also of the deployment process

- High degree of standardization and “self-documentation” through automation

- High scalability (the same workflow is used for all environments, and parts of it may even be reused across applications!)

- Minimal manual intervention

- Full audit trail

In short: consistently higher application quality and more relaxed employees!

Does this sound interesting?

If you can relate to some of the challenges described and want to know how to overcome these issues in more detail, feel free to get in touch with me via one of the channels listed below!

Martin Polak

Senior Consultant

Martin Polak is an expert in process automation, especially in the field of Continuous Everything with a focus on CDA/ARA and test automation. In his spare time, you will find him either in deep snow or underwater.

Sixsentix is a company that focuses on software quality and efficiency via automation.