A guest article of André Lechner.

Every now and then, Automic administrators get a friendly reminder to cut costs.

Sooner or later, you end up with the topic of licensing costs. In my case, it was the licensing costs of Oracle databases. A quick look at the Automic compatibility list brings up some hope. PostgreSQL is supported since the AE version 12.2.x. It is a free database with virtually no licensing costs. Especially for us at Best blu Consulting with Energy GmbH where we follow open source as a company philosophy. And I already have some experience with PostgreSQL.

So how did I proceed with the migration?

Many roads lead to PostgreSQL

Before I start I make my first considerations: How do I get my data from the Oracle database to PostgreSQL?

There are three possibilities:

- Automic DBUnload and DBLoad

- Automic DBClientCopy

- Database side tools: In the Postgres community there are some tools that migrate whole Oracle database to Postgres. Very often you will find informations about ora2pg

Using Automic DBUnload and DBLoad is in my opinion the most pragmatic approach. All objects and definitions are taken over and the downtime is reasonable even for larger systems, if you do without the statistics and reports. The whole migration could be done while the system is running. However, the loss of statistics and reports is a major drawback.

I personally see Automic DBClientCopy as the best way. You can do a gradual migration and you are not forced to do everything in a certain time frame. Statistics and reports are preserved and the procedure is the same as with a normal DB client copy. You can rely on your own experience and roughly estimate how long something will take. On the other hand there is the effort to build up a parallel Automic system.

Database side tools like ora2pg are certainly also a good solution. However, I can’t write much about it, because I haven’t managed to take a closer look at this way yet.

The initial situation of our system

In order for you to better understand my approach and compare it with your situation, I have summarized the initial situation on our system.

- Linux server in the cloud with 4 CPU and 16GB RAM, as well as 150 GB HDD

- Linux CentOS version 7

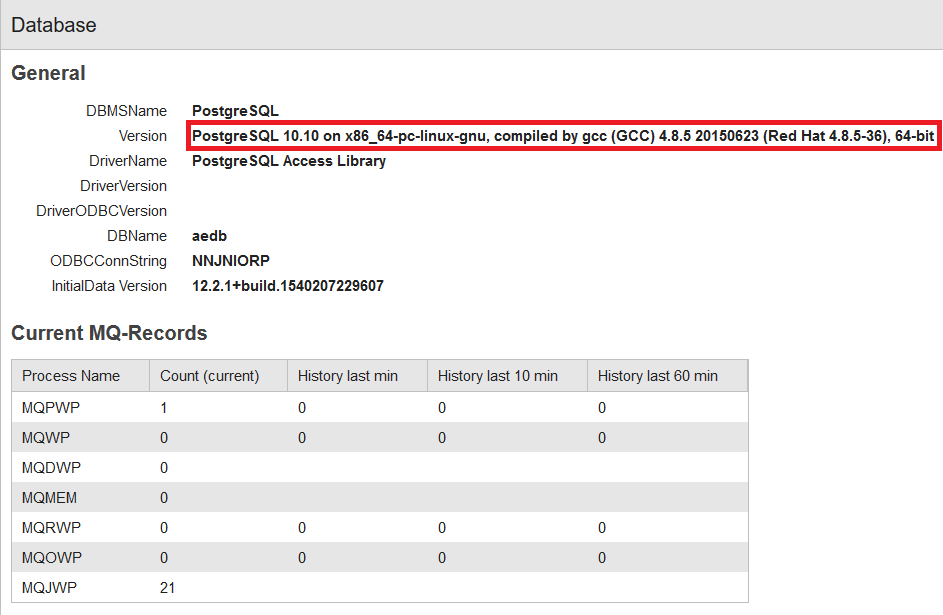

- AE Server in version 12.2.1

- Oracle database 12 without ILM and just under 20 GB content

- Clients: 20 pieces

- Number of objects per client: about 5000 pieces

With these prerequisites I decided to use DBUnload and DBLoad. With the rather small database size of only about 20GB, I think this is the fastest way to proceed. Also, the statistics were not relevant to me at this point.

For the whole action I had planned 4 hours and managed to get it done by that time. However, once you have gained some experience with the tools, it certainly goes faster.

Preparing the migration

I must mention for safety’s sake, as with an Automic Update/Upgrade it is always an advantage to have the Process Monitoring relatively empty. Also, a run of Archive/Reorg/Unload Automic Utility beforehand doesn’t hurt.

These are the steps I took to prepare for the migration:

- Download Postgres Server, Client and Contrib version 10

- Install graphical user interface (I used pgadmin4)

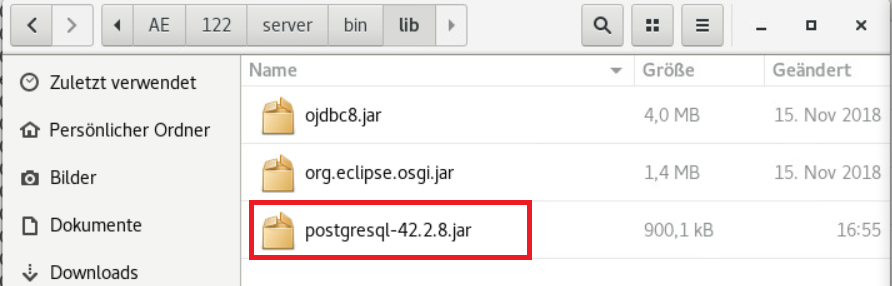

- Download appropriate JDBC driver for the AE and copy it to the correct directory

Set up initial database from postgres

Set up initial database from postgres- Enable AE server in postgresql.conf to md5

- Set up postgres10 service and start postgres

- Switch to the postgres system user postgres

- Set up according to Automic documentation: Recommendations for PostgreSQL

ATTENTION, Do not load initial data! - In the AE the necessary SQL Varas already on Postgres adapt

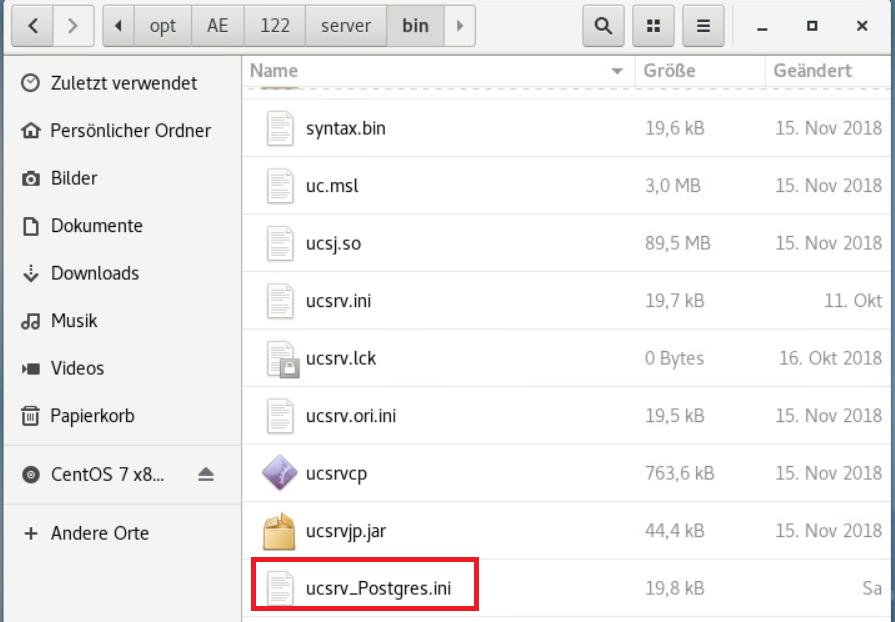

- Duplicate AE server ini file and adjust SQLConnection to Postgres database

Run reorg and archive with Automic utilities over AE database Oracle.

Run reorg and archive with Automic utilities over AE database Oracle.

Getting down to business: Migrating the data

After the preparation was complete, I started the actual data migration.

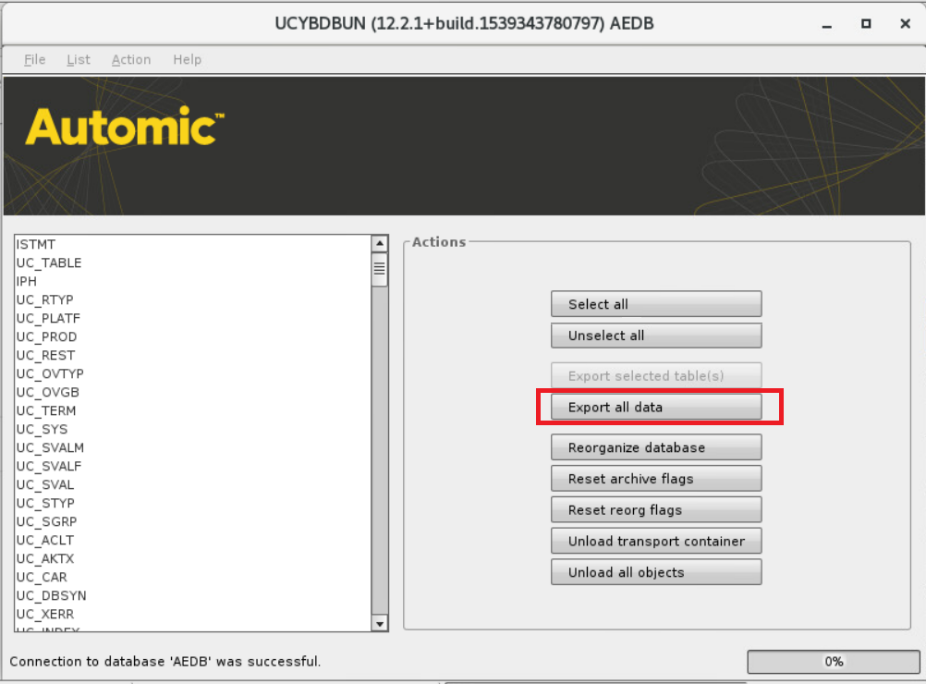

First, of course, I shut down AE via ServiceManagerDialog. After that I could start DBUnload and select “Export all Data”.

The result is a uc_data.txt which contains only the pure data of the Oracle database. In my case it was 1.5 GB of pure data.

ATTENTION, at this point a big obstacle is to be avoided. With a large database the step would lead in such a way, to the abort of the DBUnload, because the RAM simply fills up. To make the step work, the corresponding tables A*, R*, E* and MELD must not be unloaded. Furthermore the logging should be deactivated.

For the actual migration I did the following two steps:

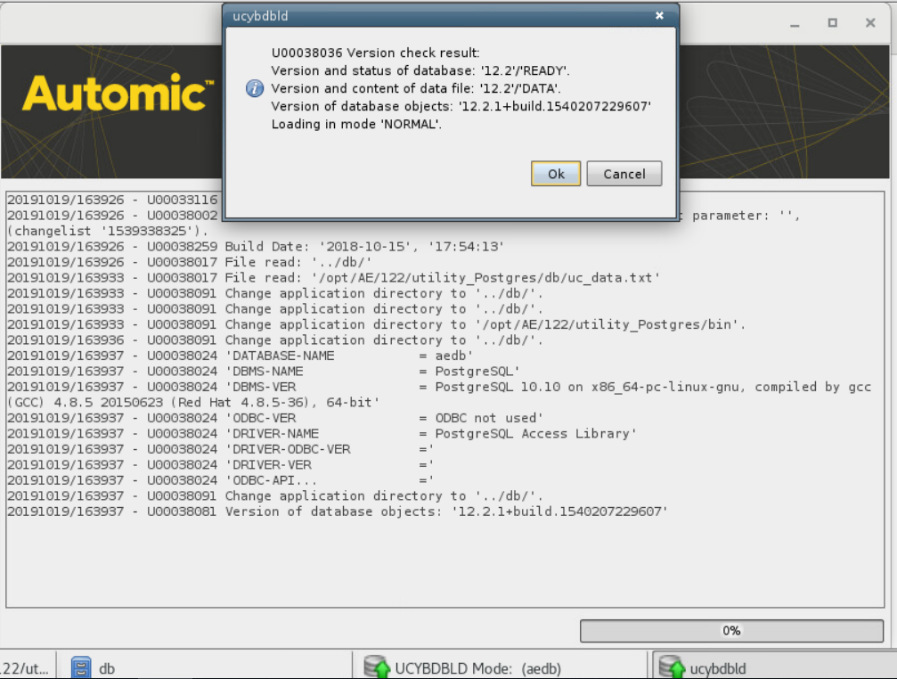

- First I executed the statements from uc_dll.sql, I did this with pgadmin4 directly to the database. In no case initial data must be loaded, otherwise the data chaos is inevitable. So check again if only schemas and stored procedures have been loaded.

- After that I loaded the unloaded data with DBLoad into the Postgres database.

This means that the migration is already complete. So I very quickly shut down the AE server and redirected to the Postgres database as follows:

- Saved the ucserv.ini and then renamed the ucserv_postgres.ini to ucserv.ini.

- Started the single WPs and CPs via ServiceManagerDialog, using the Cold-Start mode.

End of migration: All good?

I’ll be honest, I can’t answer the above question at all. In my personal opinion, I think it’s great to save license costs. However, it is also a new way to migrate databases, which certainly has its challenges.

On a productive environment, I would have lost statistics records and reports with the above approach. Everyone has to decide for themselves whether it is worth the migration.

I hope this little article has shown you that it is possible to migrate to PostgreSQL, and given you a few hints about it. With a little more time and (budget) you can accomplish the data migration relatively fast.

Have fun trying it out

André Lechner

André Lechner

André Lechner

Business Process Automation / Administration – Consultant

I am 41 years young and work for Best-blu consulting with energy GmbH as a Senior Consultant for Business Process Automation and Administration. I settled down in a quiet village in Lower Saxony and decided to go the old-fashioned way as a work scheduler EDV. I run Process Automation on various operating systems, including BS2000 Mainframe, z/OS and SAP. Because that didn’t fulfill my needs, I had the idea to additionally become an Automic Administrator. Since then, I have been dealing with updates and upgrades and have been pursuing this hobby for more than 10 years now.