Automic’s V12 was released on October 4 and brought us several innovations. I already gave a brief overview of V12 shortly after its release. In this article, I introduce you to my favorite new feature of V12: Automic Analytics.

To prepare for this article, I recommend watching the following Automic video, in which product manager Rémi Augé summarizes what Analytics can do and how it works. If possible, watch the video in full-screen mode so that the text is legible.


In the meantime, I’ve been able to test Analytics extensively, and I have also turned to an insider for support: Tobias Stanzel is a software product designer at Automic and was responsible for the development of Automic Analytics.

Tobias has worked in software development for 15 years and has been with Automic for 4 years. His favorite leisure activities are spending time with his three children and being out in nature. Otherwise, he is busy improving our favorite automation platform even further. His motto is: “Find out what customers need – then build the appropriate product.”

It’s been a great pleasure for me to chat with him about Automic Analytics and to get answers to some questions which were uppermost on my mind – and perhaps on yours, too.

A big thank you to Tobias for a great discussion and much useful and exciting background information.

The Architecture of Automic V12 Analytics

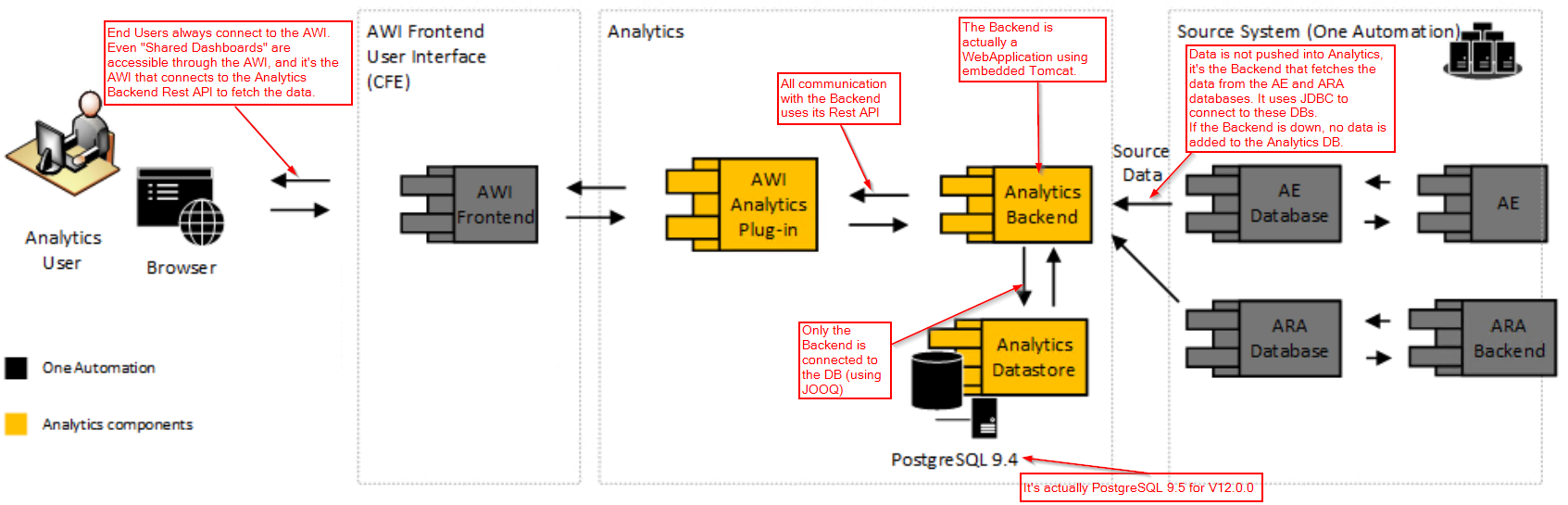

The V12 documentation contains this graphic explaining V12 Analytics (the comments in red are mine). One factor is especially striking: the Analytics Datastore is a PostgreSQL database.

When we met, I asked Tobias the obvious questions: Why PostgreSQL – and why PostgreSQL exclusively?

‘We wanted to offer a solution that is optimized for one database system. That reduces complexity and allows us to fully utilize the functionality of the database. We didn’t have to limit ourselves to the lowest common denominator.

We also wanted to include the Datastore directly in our product. That’s why it had to be an open source database.

That it ended up being PostgreSQL was a purely technical decision.

Automic will of course support you in setting up the Datastore, as PostgreSQL ships as part of the product.

The Analytics database was conceived as a local Datastore; standard external access is not possible. We include data management tools in the form of an Action Pack. They make it possible to administer Analytics without any knowledge of PostgreSQL.’

Setup and Installation of V12 Analytics

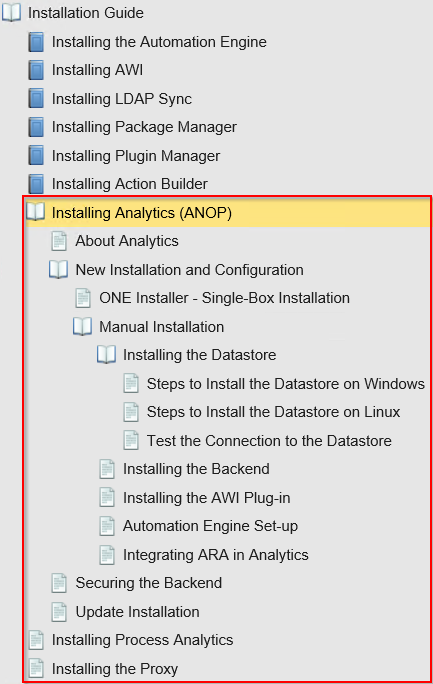

A detailed installation guide is available in the AE Administration Guide.

Here are a few additional notes from me:

- During setup of the Analytics Datastore, you will be given an API key. You will have to add it later to the configuration file of the AWI plugin. If you lose the key, you can find it in the Analytics database in the column “key”, the only column of table api_key.

- By default, the Analytics Datastore (the PostgreSQL installation) only permits local connections. That’s no problem as long as the Analytics backend runs on the same server as the database. If you want to run the backend on a different server, the installation guide explains how to change this under “Test the Connection to the Datastore”.

- Currently, Analytics supports only AE databases from Oracle and MS SQL Server as data sources; DB2 is not (yet?) supported.

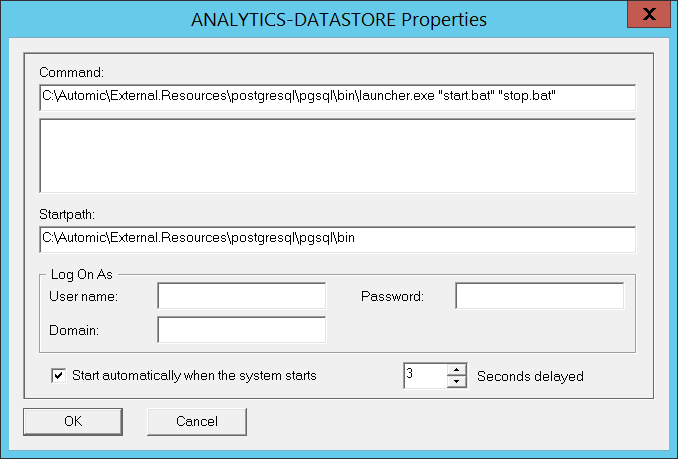

- I recommend adding not only the backend but also the Datastore to the Automic Service Manager. The screenshot shows an example setup for the Analytics Datastore in the Service Manager.

- The Analytics backend can also use SSL. Read more about this in the section “Securing the Backend” of the Administration Guide.

- Passwords in the backend configuration file “application.properties” can (and should) be encrypted the same way as in the file ucsrv.ini of the Automation Engine.

- If you enter or change the API key in the configuration file plugin.properties of the Analytics AWI plugin after installing the plugin, you need to restart the AWI Tomcat for the changes to take effect.
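If you need to read a lost API key back from the Analytics database, a single query on table api_key is enough. Here is a minimal Python sketch that works with any DB-API 2.0 connection, for example one opened with the psycopg2 driver on the Datastore host itself (remote connections are refused by default). The function name and the connection parameters in the comment are placeholders, and the sketch assumes the table holds a single row.

```python
from typing import Optional


def read_api_key(conn) -> Optional[str]:
    """Return the API key stored in table api_key, or None if the table is empty.

    `conn` is any DB-API 2.0 connection to the Analytics Datastore.
    Assumption: api_key contains exactly one row.
    """
    cur = conn.cursor()
    try:
        # "key" is double-quoted so it is always parsed as a column name.
        cur.execute('SELECT "key" FROM api_key')
        row = cur.fetchone()
    finally:
        cur.close()
    return row[0] if row else None


# Hypothetical usage on the Datastore host (all parameters are placeholders):
# import psycopg2
# conn = psycopg2.connect(host="localhost", dbname="analytics",
#                         user="analytics", password="...")
# print(read_api_key(conn))
```

The query only uses plain SQL, so the same function can be tested against any DB-API driver.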

How is Data Moved from the AE into Analytics?

Tobias explained to me how the data are moved into the Analytics Datastore. The Analytics backend pulls the data from the AE and (optionally) ARA database. There are two different modes available to accomplish this: Normal Sampling Mode and Large Sampling Mode.

In Normal Sampling Mode, which is the standard operation, the backend pulls the data of all tasks completed in the last 6 minutes (5 minutes + 60 seconds) from the AE into the Analytics DB every five minutes. Two parameters control these time periods:

- collector.normal_sampling_period.minutes – sets both the interval between two samplings and the length of the period whose data is collected. Default value: 5 minutes.

- collector.safety_margin.seconds – sets the extra time added to each collection period. Default value: 60 seconds.

The column AH_Timestamp4 is used as the search criterion in the AE database.
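To make the timing concrete, here is a minimal Python sketch of how such a collection window could be computed. It assumes that the window ends at the moment of sampling and starts one sampling period plus the safety margin earlier. The function names and the SQL text are hypothetical; the backend's actual query is not published.

```python
from datetime import datetime, timedelta

# Defaults described above (parameter names in the comments).
NORMAL_SAMPLING_PERIOD = timedelta(minutes=5)  # collector.normal_sampling_period.minutes
SAFETY_MARGIN = timedelta(seconds=60)          # collector.safety_margin.seconds


def normal_window(now: datetime,
                  period: timedelta = NORMAL_SAMPLING_PERIOD,
                  margin: timedelta = SAFETY_MARGIN) -> tuple[datetime, datetime]:
    """Return (start, end) of the end-time window collected at `now`.

    Assumption: the window ends at the sampling moment and reaches back
    one sampling period plus the safety margin (5 min + 60 s = 6 min).
    """
    return now - period - margin, now


def normal_query(start: datetime, end: datetime) -> tuple[str, tuple[datetime, datetime]]:
    """Build a hypothetical parameterized query filtering on AH_Timestamp4.

    Uses the DB-API "qmark" placeholder style; other drivers may need a
    different placeholder style.
    """
    sql = "SELECT * FROM AH WHERE AH_Timestamp4 >= ? AND AH_Timestamp4 < ?"
    return sql, (start, end)
```

Because a new sample starts every 5 minutes but each one covers 6 minutes, consecutive windows overlap by the safety margin: with the defaults, the sample at 12:00 covers 11:54 to 12:00 and the one at 12:05 covers 11:59 to 12:05. Presumably this catches records whose end time is written with a slight delay; the sketch does not show how records seen twice in the overlap are deduplicated.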

Large Sampling Mode is used whenever the youngest (most recent) dataset in the Analytics database is older than the normal sampling period. In that case, Large Sampling looks back into the past as far as that youngest dataset and samples all data created since then. The data is retrieved in packages of at most 50,000 records.

If the youngest dataset in Analytics is older than 1 day, the data is sampled day by day. Each package still contains at most 50,000 records.

Large Sampling looks back into the past for a maximum of 32 days. Older data are not collected.

Of course, this mode also allows you to change all important parameters:

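- collector.large_sampling_period.days – sets the length of the time slices used when sampling day by day. Default value: 1 day.

- collector.initial_collect_before_now.days – sets how far back into the past Large Sampling looks at most. Default value: 32 days.

- collector.chunk_size.rows – sets the maximum number of records per package. Default value: 50,000 rows.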
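The interplay of these parameters can be sketched in Python as follows. This illustrates the behavior described above and is not Automic's code. It assumes that Large Sampling is triggered when the most recent record in Analytics is older than the normal sampling period, and it treats an empty Analytics database (`youngest is None`) as an initial load reaching back the full 32 days, which is what the name collector.initial_collect_before_now suggests. All function names are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Optional

# Defaults of the collector.* parameters listed above.
NORMAL_PERIOD = timedelta(minutes=5)  # collector.normal_sampling_period.minutes
LARGE_PERIOD = timedelta(days=1)      # collector.large_sampling_period.days
MAX_LOOKBACK = timedelta(days=32)     # collector.initial_collect_before_now.days
CHUNK_ROWS = 50_000                   # collector.chunk_size.rows


def needs_large_sampling(youngest: Optional[datetime], now: datetime) -> bool:
    """True if Analytics is empty or its most recent record is older than one normal period."""
    return youngest is None or now - youngest > NORMAL_PERIOD


def large_windows(youngest: Optional[datetime], now: datetime) -> list[tuple[datetime, datetime]]:
    """Split the catch-up range into consecutive windows of at most one day.

    The range starts at the youngest record in Analytics, but never more
    than 32 days before `now`; an empty database starts at the 32-day limit.
    """
    limit = now - MAX_LOOKBACK
    start = limit if youngest is None else max(youngest, limit)
    windows = []
    while start < now:
        end = min(start + LARGE_PERIOD, now)
        windows.append((start, end))
        start = end
    return windows


def chunks(rows: list, size: int = CHUNK_ROWS) -> list[list]:
    """Split the records of one window into packages of at most `size` rows."""
    return [rows[i:i + size] for i in range(0, len(rows), size)]
```

A gap of two hours yields a single window, a gap of two and a half days yields three windows, and a gap of 100 days is cut off at 32 daily windows. In a real backend the packages would more likely be fetched page by page from the AE database than split from an in-memory list; the list version just keeps the sketch self-contained.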

By the way, you can also activate chart caching for the Analytics backend in its configuration file. By default, the charts are recalculated every time they are displayed so that they reflect the most recent data. With large data volumes, this can lead to significant loading times.

With caching activated, the charts do not show the latest data right away, but they appear without delay when you navigate to them.

Which Data are Moved from the AE into Analytics?

So far, Analytics models only three AE data sources: jobs, workflows and service fulfillment. For this purpose, two AE tables are copied into Analytics:

- AH – the header table of the statistics sets

- LASLM – the monitoring table for service fulfillment (for the new object type SLO)

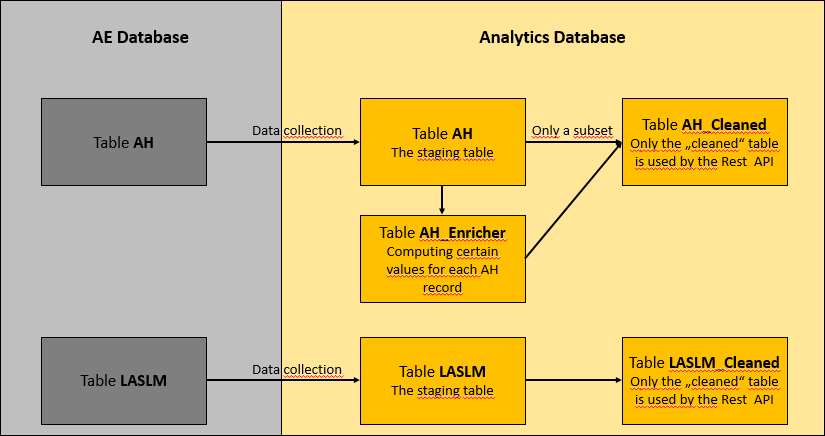

As shown in the following screenshot, the data are first copied 1:1 into staging tables. Afterwards they are enriched and normalized for the modeled tables AH_Cleaned and LASLM_Cleaned. These then become the basis for the Analytics.

The data in table LASLM are simply moved completely into LASLM_Cleaned and are then available for the Analytics.

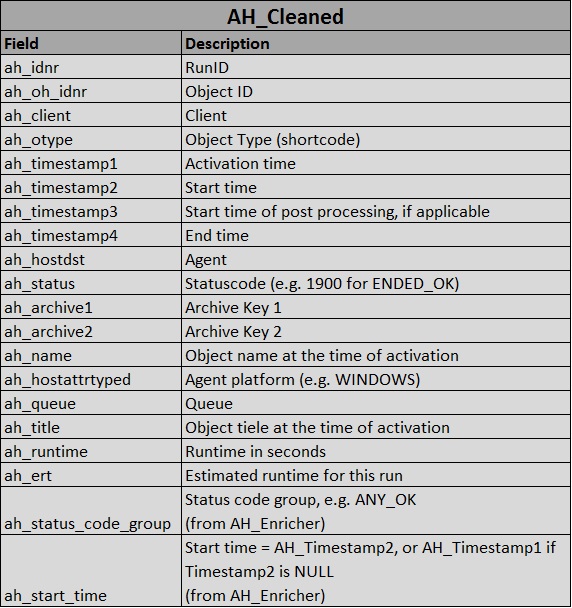

Things are a bit more complicated with table AH: Table AH in the Analytics DB still contains all fields of the AE table AH. Table AH_Cleaned, however, contains only 18 of the 129 columns of AH – but also 2 additional columns from AH_Enricher.

By the way, AH_Cleaned contains statistics not only of jobs and workflows but of almost all object types. However, only jobs and workflows have been modelled for Analytics, so only these two object types can be used.

Altogether, 20 fields of jobs and workflows (the 18 columns from AH plus the 2 from AH_Enricher) are available for Analytics.
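The step from the staging table AH to AH_Cleaned can be pictured roughly as in the following Python sketch, which shows only projection and enrichment, not normalization. Everything concrete in it is an assumption: the four columns stand in for the actual 18, which are not listed here; the enrichment columns are joined on AH_Idnr purely for illustration; and JOBS and JOBP are the AE object type codes for jobs and workflows.

```python
from typing import Any

Row = dict[str, Any]

# Placeholder subset: the real AH_Cleaned keeps 18 specific AH columns.
KEPT_AH_COLUMNS = ("AH_Idnr", "AH_Name", "AH_OType", "AH_Timestamp4")
# Object types modelled for Analytics: jobs (JOBS) and workflows (JOBP).
MODELLED_TYPES = {"JOBS", "JOBP"}


def clean_ah(staging: list[Row], enricher: dict[int, Row]) -> list[Row]:
    """Project staged AH rows onto the kept columns and add enrichment columns."""
    cleaned = []
    for row in staging:
        out = {col: row.get(col) for col in KEPT_AH_COLUMNS}
        # Hypothetical join: enrichment values looked up by run number.
        out.update(enricher.get(row["AH_Idnr"], {}))
        cleaned.append(out)
    return cleaned


def analyzable(cleaned: list[Row]) -> list[Row]:
    """Keep only job and workflow rows, although the cleaned data holds almost all types."""
    return [r for r in cleaned if r.get("AH_OType") in MODELLED_TYPES]
```

This mirrors the point above: AH_Cleaned itself still contains almost all object types, and the restriction to jobs and workflows comes from what has been modelled, represented here by the separate `analyzable` filter.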

My Opinion: An Exciting Minimum Viable Product

In my first blog post on V12, I described Automic Analytics as a “Minimum Viable Product” but at the same time declared myself an absolute fan.

Does that sound contradictory to you? Not at all:

I find both the architecture of Analytics and the way it is implemented truly exciting. They provide a great foundation for Analytics to include more and more data step by step in the future and make it available for analysis.

So far, you can use only service fulfillments and 20 attributes of the statistics sets of jobs and workflows for Analytics. That’s what I mean by a minimum viable product. I am sure that this will change quickly. After all, the AE holds an enormous treasure of data that can be made available for analysis.

Next Steps for Automic Analytics

I asked Tobias Stanzel about future plans for Analytics and got a promising reply:

‘We have big plans for Analytics. At the moment, we are working in the area of data modelling in order to support additional objects. Additionally, we will continue to add more and more AE attributes into Analytics – for example, AV data in order to show object variables and the values of PromptSets.

Also, Actions are to be expanded, but at the moment I cannot give you details on that.

For me personally, the integration with AWI is also an important starting point for improvements. I think it would be cool to link to other AWI perspectives with an Analytics widget.

In any case, I can promise you: There will be much going on at Automic in the data area!’


That sounds fantastic, doesn’t it? I’m especially eager to find out what the mysterious announcement in the last sentence is all about…

What about you? Have you had a chance to experiment with Analytics yet? Do you have wishes or suggestions for the future? Let me know in the comments and I will forward all feedback to Automic.